Calibration Curve-fitting

Overview

Finally, we get into the core of calibration, the curve-fitting step, again using the O’Connell’s ELISA data. This first example of curve-fitting and derivation of the inverse model is long because the steps in the analysis are explained in greater detail than in later examples.

Learning objectives for this section:

- Fit a calibration curve to one run of data using nonlinear regression (4PL):

- specify non-linear calibration curve functions in R;

- specify a weighting function that reflects change in variance with concentration (RER);

- specify initial values for the parameters of the calibration curve;

- use weighted and unweighted residual plots to evaluate model fit;

- approximate iteratively reweighted least squares (IRLS) regression.

- specify non-linear calibration curve functions in R;

- Calculate the inverse function of the calibration curve and:

- return predicted concentrations;

- estimate the variance of the predicted concentration.

- return predicted concentrations;

Basic curve-fitting

First we reload and examine the O’Connell ELISA data:

# O'Connell ELISA data

ocon <- read.csv(file.path(data.path, "ocon.csv"), header = TRUE)

head(ocon)## conc rep od

## 1 0 1 0.100

## 2 0 1 0.120

## 3 3 1 0.120

## 4 8 1 0.120

## 5 23 1 0.130

## 6 69 1 0.153# Plot the O'Connell data

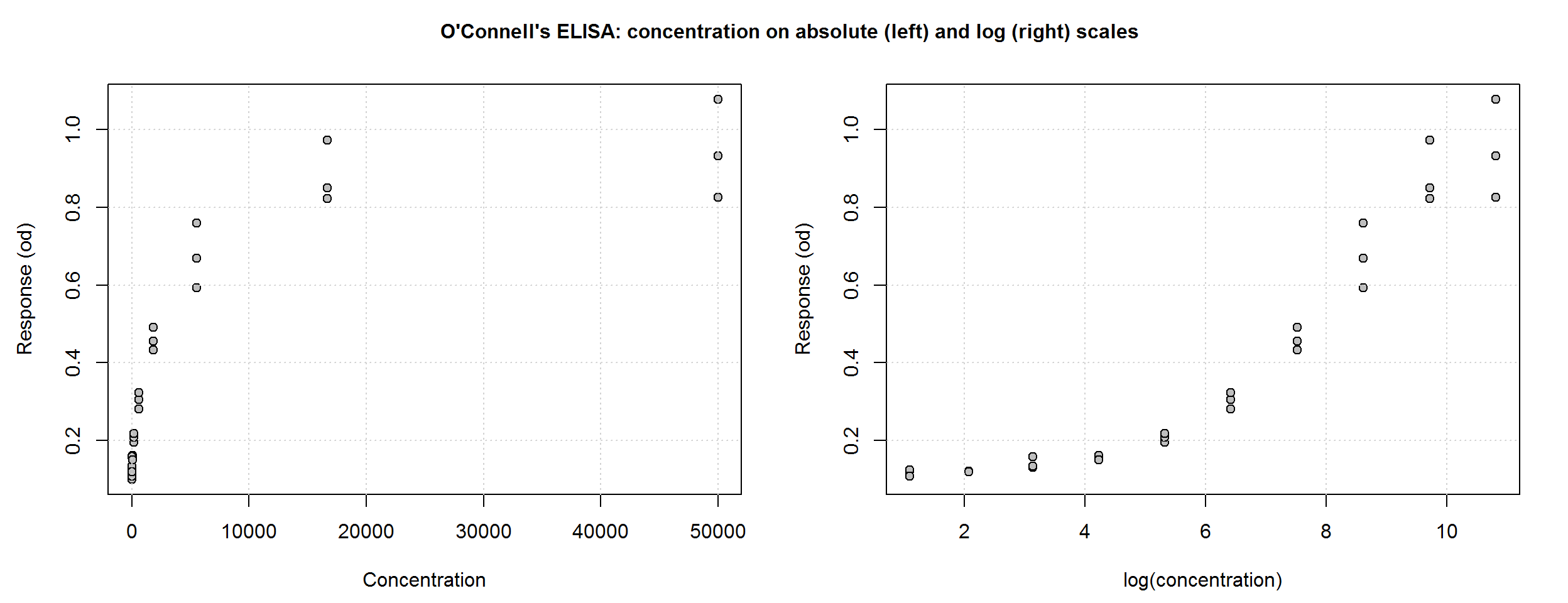

par(mfrow = c(1, 2), cex.main = 1, mar = c(4, 4, 1, 2), oma = c(0.5, 0.5, 2.5, 0))

plot(ocon$conc, ocon$od, pch = 21, bg = "grey", ylab = "Response (od)",

xlab = "Concentration")

grid()

# Plot on the log(x) scale

plot(log(ocon$conc), ocon$od, pch = 21, bg = "grey", ylab = "Response (od)",

xlab = "log(concentration)")

grid()

title("O'Connell's ELISA: concentration on absolute (left) and log (right) scales",

outer = T)

par(mfrow = c(1, 1))Unweighted nonlinear regression in R

Unlike linear regression, nonlinear regression models are fit using an iterative algorithm, which requires starting values for each unknown parameter. Based on the plot above and the interpretation of the parameters of the 4PL function, we can visually estimate reasonable starting values. Given the simple structure of the OConnell data and the strong relationship between concentration and response, starting values do not need to be very accurate.

The mean of the zero-calibrators, 0.1, is a good starting value for parameter \(d\). The upper asymptote, \(a\), looks like it’s going off to a limit of about 1. The \(b\) parameter is harder to imagine, but for an ascending curve, a negative number is needed; -1 will do. From the log(concentration) plot, \(c\), the inflection point, looks like it may be around 8 so on the absolute scale that is 2981.

Let’s enter into R all the components for 4PL curve-fitting:

# ------------ Function: 4PL curve function ---------------------------------

# We actually loaded it ealier with source("../../AMfunctions.R"),

# but this is what it looks like

M.4pl <- function(x, small.x.asymp, inf.x.asymp, inflec, hill){

f <- small.x.asymp + ((inf.x.asymp - small.x.asymp)/

(1 + (x / inflec)^hill))

return(f)

}

# ------------- end ---------------------------------------------------------

# Create a vector with the starting values

# (The order of the parameters is important. It determines the list index number in later functions.)

start.ocon <- c(small.x.asymp = 0.1, inf.x.asymp = 1, inflec = 3000, hill = -1)Let’s fit an unweighted 4PL curve first:

uw.4pl <- nlsLM(od ~ M.4pl(conc, small.x.asymp, inf.x.asymp, inflec, hill),

data = ocon,

start = start.ocon)

summary(uw.4pl)##

## Formula: od ~ M.4pl(conc, small.x.asymp, inf.x.asymp, inflec, hill)

##

## Parameters:

## Estimate Std. Error t value Pr(>|t|)

## small.x.asymp 0.11767 0.01231 9.561 6.73e-11

## inf.x.asymp 1.04351 0.05440 19.184 < 2e-16

## inflec 3288.68616 650.10993 5.059 1.68e-05

## hill -0.84729 0.10216 -8.294 1.78e-09

##

## Residual standard error: 0.04563 on 32 degrees of freedom

##

## Number of iterations to convergence: 5

## Achieved convergence tolerance: 0.0000000149Interpretation of regression output:

Formulareminds us of the model we specified.Parameterslists the final point estimate and standard error for each parameter.Residual standard erroris the square root of MSE, the unexplained variance.Convergence information: Notice that although our starting values were not very accurate, the model converged in only 5 iterations. Sometimes with more complex data structures or with sparse data sets, the algorithm will reach a built-in limit of iterations and we will need to change that limit, choose different starting values or treat some of the parameters as known. We will do this in the “R’s ELISA{gtools}” curve-fitting tutorial.

What we’re looking for in a regression model (in order of importance):

Convergence.

Do the parameter estimates look reasonable?

No model assumption violations: From the residual plots (weighted residuals for weighted models), are the residuals evenly distributed around zero line?

Remember: R^2 and F-tests are not very meaningful with such highly correlated data i.e. known to have a strong relationship between x and y (see the Background).

Using the output for our unweighted nonlinear model, let’s interpret the model using the internal criteria listed above:

- Convergence.

The algorithm converged.

- Do the parameter estimates look reasonable?

The parameter values have the expected sign and are in plausible ranges.

- No model assumption violations…

We can’t answer this with the numerical summary output. Two graphical tools that can help verify regression assumptions are the fitted regression curve and the curve’s standardised residuals. Standardised residuals are the residuals scaled to a normal distribution with standard deviation of 1. The distribution of the residuals can then more easily be judged unacceptably large or small. We wrote a wrapper function, plotDiag to facilitate this:

# plotDiag():

# Enter nlsLM model and overall plot title

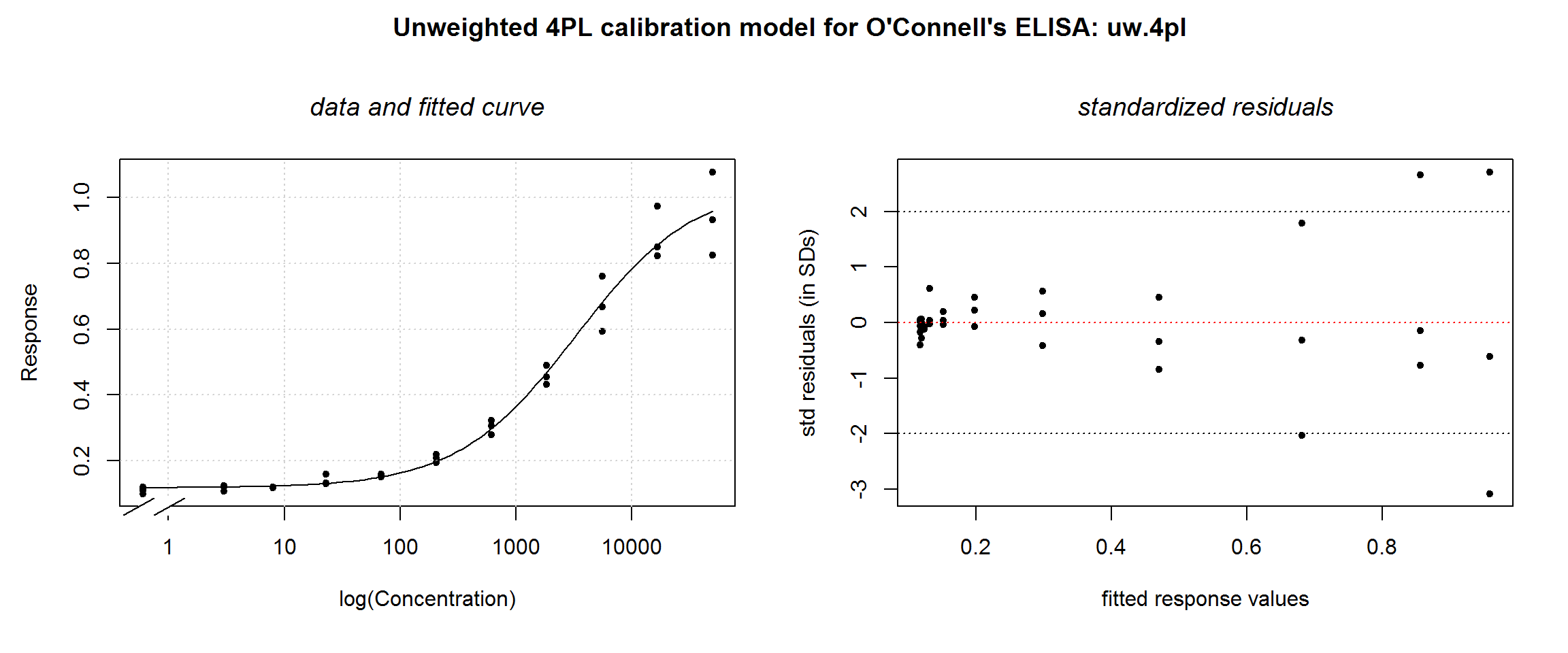

plotDiag.nls(uw.4pl, "Unweighted 4PL calibration model for O'Connell's ELISA: uw.4pl")

- No model assumption violations: From the residual plots (weighted residuals for weighted models), are the residuals evenly distributed around zero line?

The fitted curve looks reasonable, but the residual plot illustrates the violation of the homoscedasticity assumption. This indicates that a weighted model is necessary.

Basic weighted curve-fitting

One approach to modeling non-constant variance in a regression model is by assigning a weight to each observation such that the weights are inversely proportional to the variance. As discussed in the previous section, we typically notice an increasing association between the observed response and the response variance. We chose to model this using the power function, \(Var(y|x) = a*E(y|x)^{theta}\), though other functions are possible. Note that \(E(y|x)\) is the predicted value of the response (y) at a given concentration (x). To fit a weighted model within R, we use the weights argument in the nlsLM function. Recall from in the previous section that the best estimate for \(theta\) was 3.

# Weighted 4PL

# Theta for weighting (from 'Characterising variance')

theta.ocon <- 3

y.curve <- predict(uw.4pl)

w.4pl <- nlsLM(od ~ M.4pl(conc, small.x.asymp, inf.x.asymp, inflec, hill),

data = ocon,

start = start.ocon,

weights = (1 / y.curve^theta.ocon)

)

summary(w.4pl)##

## Formula: od ~ M.4pl(conc, small.x.asymp, inf.x.asymp, inflec, hill)

##

## Parameters:

## Estimate Std. Error t value Pr(>|t|)

## small.x.asymp 0.112907 0.002115 53.383 < 2e-16

## inf.x.asymp 1.131557 0.120940 9.356 1.13e-10

## inflec 4501.949633 1569.162177 2.869 0.00723

## hill -0.738109 0.050578 -14.593 1.07e-15

##

## Residual standard error: 0.1534 on 32 degrees of freedom

##

## Number of iterations to convergence: 6

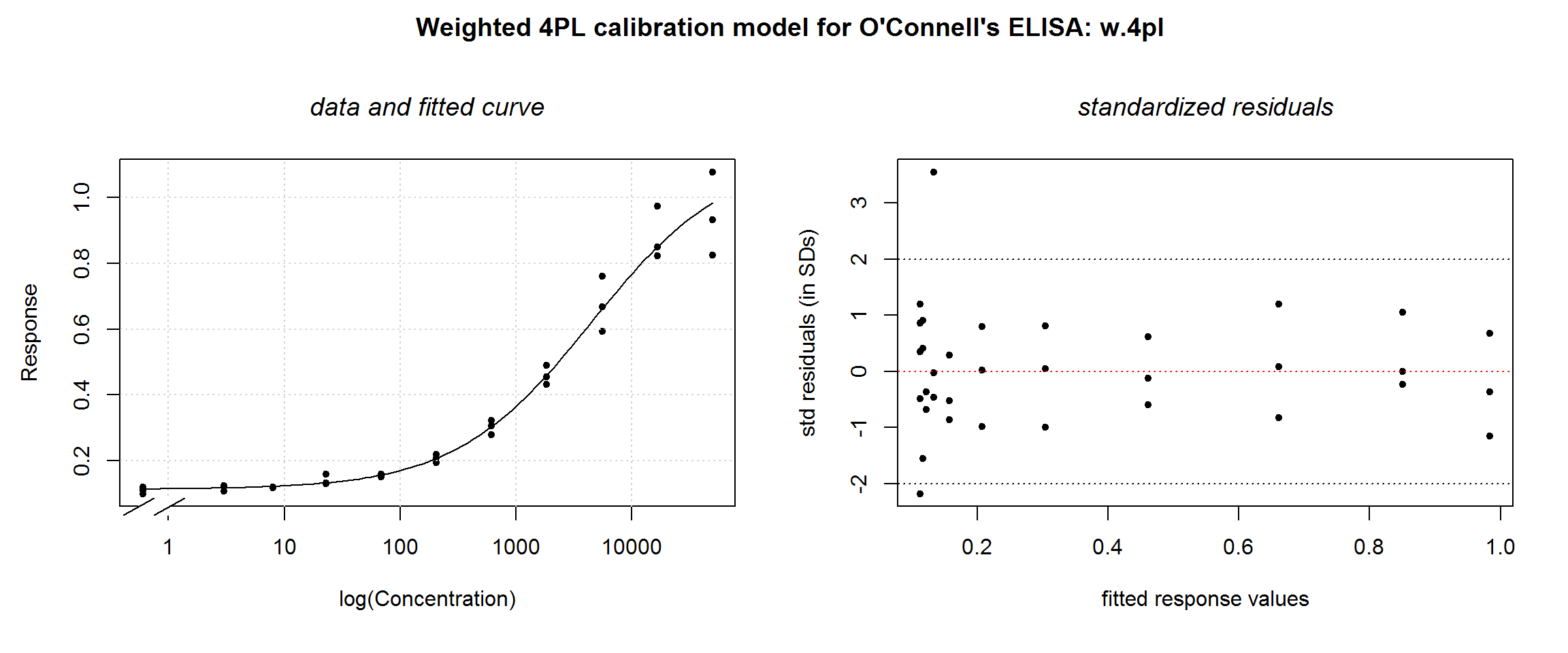

## Achieved convergence tolerance: 0.0000000149plotDiag.nls(w.4pl, "Weighted 4PL calibration model for O'Connell's ELISA: w.4pl")

The fitted curve hardly changes, but the residuals look much better. Depending on the outlier criterion you use, one data point (for concentration = 23) may be considered an outlier. We prefer not to exclude borderline questionable points, however—especially when there are so few replicates.

In the original O’Connell tutorial, their modeling algorithm settles on a different value for theta: 2.4; that is, 2 x 1.2 because they use a parameterization in terms of standard deviation rather than variance (1993, 110). Let’s see how a different \(theta\) value affects residuals:

# A different theta, 2.4 (from the O'Connell article)

theta.ocon.lit <- 2.4

w.lit.4pl <- nlsLM(od ~ M.4pl(conc, small.x.asymp, inf.x.asymp, inflec, hill),

data = ocon,

start = start.ocon,

weights = (1 / y.curve^theta.ocon.lit)

)

summary(w.lit.4pl)##

## Formula: od ~ M.4pl(conc, small.x.asymp, inf.x.asymp, inflec, hill)

##

## Parameters:

## Estimate Std. Error t value Pr(>|t|)

## small.x.asymp 0.113199 0.002444 46.326 < 2e-16

## inf.x.asymp 1.111586 0.083880 13.252 1.54e-14

## inflec 4189.402732 1117.980807 3.747 0.000708

## hill -0.750911 0.048126 -15.603 < 2e-16

##

## Residual standard error: 0.09675 on 32 degrees of freedom

##

## Number of iterations to convergence: 6

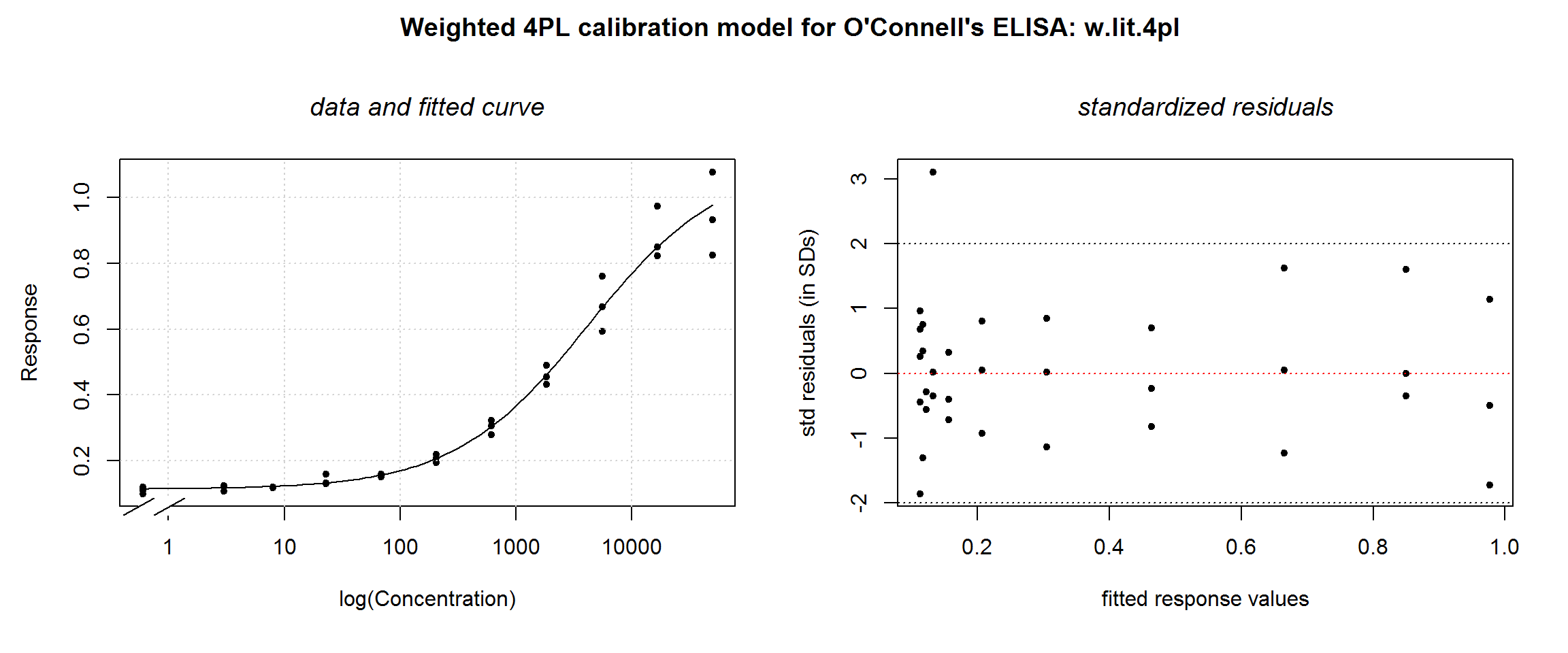

## Achieved convergence tolerance: 0.0000000149plotDiag.nls(w.lit.4pl, "Weighted 4PL calibration model for O'Connell's ELISA: w.lit.4pl")

Again, no visible difference in the fitted curve, but the weighted residuals plot changes a little. At this slightly lower value of \(theta\), there seems to still be some increase in the residuals at high response values. A larger data set would be needed to make a definitive choice.

# What are the parts of the model object and summary object

summary(w.4pl)

weights(w.4pl)

mean(weights(w.4pl))

sum(weights(w.4pl))

# Unweighted residuals

resid(w.4pl)

# Unweighted sum of squares - use to compare models

sum(resid(w.4pl)^2)

# Unweighted MSE

sum(resid(w.4pl)^2)/summary(w.4pl)$df[[2]]

# Unweighted RSE

sqrt(sum(resid(w.4pl)^2)/summary(w.4pl)$df[[2]])

# Weighted residuals - use this for plotting

summary(w.4pl)$resid

# Same as

sqrt(weights(w.4pl))*resid(w.4pl)

# Weighted sum of squares

sum(summary(w.4pl)$resid^2)

# RSE (sigma) - weighted

summary(w.4pl)$sigmaIteratively reweighted least squares algorithm

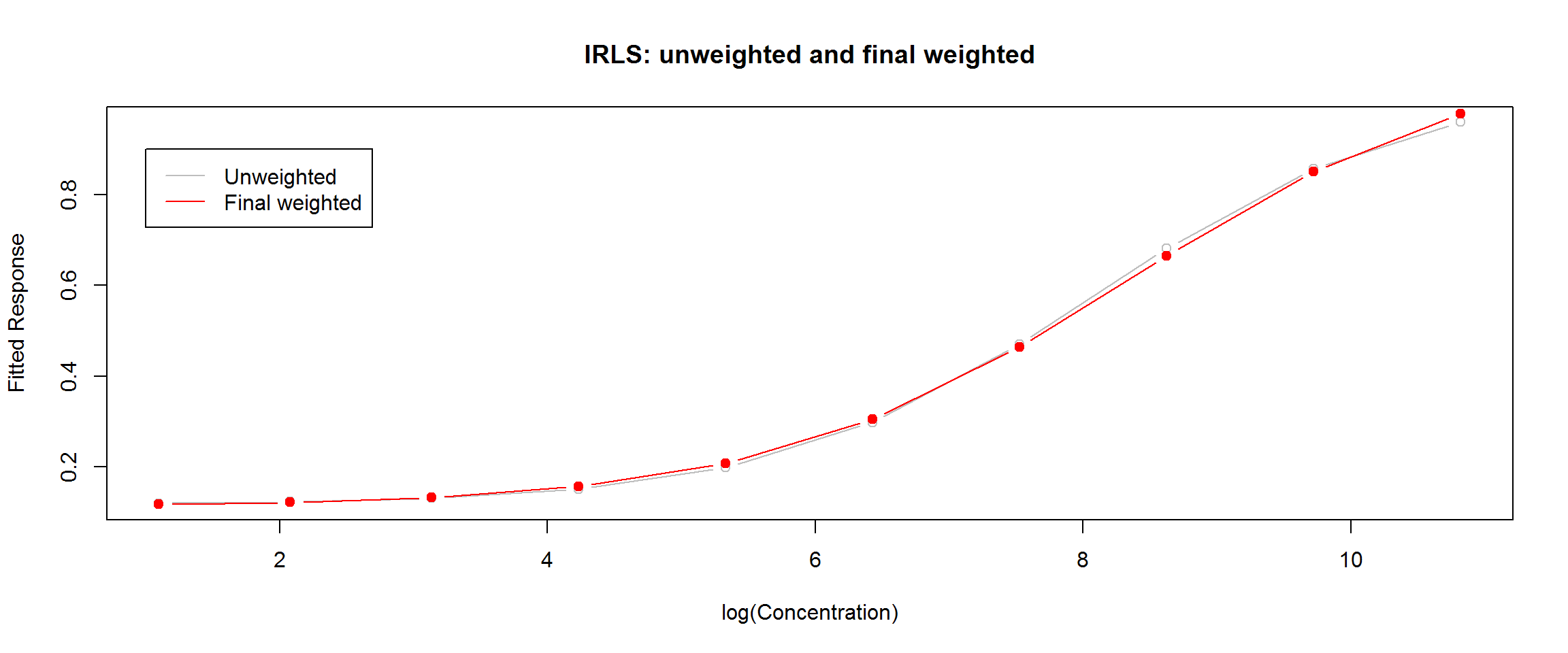

As we have seen, weighting has a very small effect on the fitted regression curve with these data, but a big effect on the error model. Therefore, we expect the error model may be sensitive to the representation of \(y\) in the denominator of the weight function, as well as to \(theta\). Generally the raw response values are not used. One could use mean response by calibrator group or the response predicted by the unweighted model. Iteratively reweighted regression goes a step further and repeats the cycle until the changes in weighted sum of squares fall below some threshold.

We have written our own approximation of an iteratively reweighted least squares (IRLS) algorithm as function, IRLS, and a wrapper to display the results, summaryIRLS:

# Use new function IRLS.4pl()

# Provide data.frame, theta (using O'Connell's here), and start values

w2.4pl <- IRLS.4pl(df = ocon, theta = theta.ocon.lit, start = start.ocon)

summaryIRLS(w2.4pl)##

## The unweighted model:

## ---------------------------------------------------

## The weighted sum of squares was stable after 3 cycles

##

## ---------------------------------------------------

## The final model:

# A slightly different version of plotDiag.nls() (used earlier)

plotDiag.irls(w2.4pl, "IRLS 4PL calibration model for O'Connell's ELISA: w2.4pl")

The GraphPad results are shown below. Remember that GraphPad does not include the zero-calibrator data, which may account for some differences in the results.

The parameter point estimates are virtually the same. Notice, like in O’Connell, GraphPad uses a parameterisation that keeps the ‘Hill Slope’, or \(b\), positive. For comparison on the inflection point, \(c\), look at the ‘IC50’ values in GraphPad output (which they have transformed back from the estimated parameter, ‘LogIC50’). R’s standard errors are slightly narrower, but this is probably due to the slightly larger sample size that comes from including the zero-calibrators.

Let’s get some of the other statistics from our w2.4pl$end.model to compare with GraphPad output:

# 95% confidence intervals for the parameters

print(confint(w2.4pl$end.model), digits = 4)## Waiting for profiling to be done...## 2.5% 97.5%

## small.x.asymp 0.1082 0.1179

## inf.x.asymp 0.9673 1.3203

## inflec 2611.1074 7781.8225

## hill -0.8557 -0.6593# Weighted sum of squares

print(sum(summary(w2.4pl$end.model)$resid^2), digits = 3)## [1] 0.301# Sigma (RSE) - same as O'Connell's sigma in results on Fig 10

print(summary(w2.4pl$end.model)$sigma, digits = 3)## [1] 0.097Following from the SEs, R’s 95% confidence intervals are slightly narrower, but essentially the same.

R’s weighted sum of squares is larger because we have more of data points contributing to the sum of squares. Unlike weighted MSE, WSS is not averaged over the degrees of freedom.

Residual Standard Error (R’s

sigmaand GraphPad’s ‘Sy.x’) are virtually the same.

Could re-run the algorithm using O’Connell’s theta instead

Not run:

# Using O'Connell's theta

w2.lit.ocon <- IRLS.4pl(df = ocon, theta = theta.ocon.lit, start = start.ocon)

summaryIRLS(w2.lit.ocon)

plotDiag.irls(w2.lit.ocon, "IRLS 4PL calibration model for O'Connell's ELISA: w2.lit.ocon")Satisfied that our R analysis replicates the results from GraphPad and the O’Connell article, we can move on to the inverse function.

The inverse function and error model

Obtaining the inverse function of the 4PL function, being monotonic, is trivial. As given by Dunn and Wild (2013, 327)

\[x = c \Big(\frac{a-d}{y-d} -1 \Big)^{1/b}\]

The error model for the inverse function is difficult, however. The most common methods for approximating the standard deviation of the predicted concentration are Wald and inversion intervals [ref]. O’Connell and colleagues recommend a ‘Wald-type’ interval based on [second order] Taylor series expansion (1993).

The sdXhat function returns a data frame of triplets: a range of response values (yp), corresponding predicted concentration (xp) and the standard deviation of the predicted concentration (sd.xp).

# Don't forget to include the desired theta value

# (other arguments could be left as function defaults)

ocon.inv.theta.2.4 <- sdXhat.4pl(w2.4pl, theta = theta.ocon.lit)

head(ocon.inv.theta.2.4$inv.grid, 15) ## yp xp sd.xp

## 1 0.1131505 0.00050 0.494705

## 2 0.1253619 12.05155 6.717273

## 3 0.1335261 24.10260 8.922721

## 4 0.1405745 36.15364 10.832795

## 5 0.1469594 48.20469 12.589745

## 6 0.1528776 60.25574 14.246460

## 7 0.1584386 72.30679 15.831467

## 8 0.1637116 84.35783 17.362970

## 9 0.1687442 96.40888 18.853693

## 10 0.1735711 108.45993 20.313039

## 11 0.1782185 120.51098 21.748218

## 12 0.1827070 132.56203 23.164906

## 13 0.1870532 144.61307 24.567660

## 14 0.1912707 156.66412 25.960191

## 15 0.1953707 168.71517 27.345558# Compare lines 8 and 9 to O'Connell LOD (p.)

# Since cv = sd/mean, if cv = 0.2 and mean = 88.72 then

# sd = 0.2 x 88.72 = 17.74.With this output, a simple program can then retrieve (sometimes with addtional, but simple calculations):

[because it is more portable]

the predicted concentration for new response observations;

confidence or prediction intervals for the predicted concentration;

precision profile (based on coefficient of variation);

predictions for the working range (or Analytical Measurement Range) such as limits of detection, quantification.

Therefore, let’s save the grid (and the data and model) for the next tutorial:

save(ocon.inv.theta.2.4, file = "ocon.inv.theta.2.4.RData")

ocon.model.theta.2.4 <- w2.4pl[c(1,4)]

save(ocon.model.theta.2.4, file = "ocon.model.theta.2.4.RData")Summary

In this tutorial, we have fit a calibration curve to the O’Connell ELISA data using iteratively reweighted least squares and achieved virtually the same results as the published analysis and GraphPad. We also derived the inverse model from which we can estimate measures of expected performance in the next O’Connell ELISA tutorial.

For more curve-fitting practice, the (streamlined) procedure is repeated with the R’s ELISA data (coming soon…). In that tutorial, you will hopefully see that what may have felt like a long, complicated analysis, really isn’t too bad.

References

Dunn, John R, and David Wild. 2013. “Calibration Curve Fitting.” In The Immunoassay Handbook, 4th ed., 323–37. Elsevier. doi:10.1016/B978-0-08-097037-0.00013-0.

O’Connell, M.a., B.a. Belanger, and P.D. Haaland. 1993. “Calibration and assay development using the four-parameter logistic model.” Chemometrics and Intelligent Laboratory Systems 20 (2): 97–114. doi:10.1016/0169-7439(93)80008-6.